Are we walking blindfold into artificial intelligence?

10th April 2017 | By Grainne

The Impact of Artificial Intelligence and the Results of Visitor Voting

I recently visited the Humans Need Not Apply exhibition at the Science Gallery in Trinity College Dublin. The exhibition explores the possible impact of Artificial Intelligence (AI) on human lifestyles and the nature of work. As part of the exhibition, the gallery has asked visitors to vote on the likelihood and desirability of AI being used in three different scenarios.

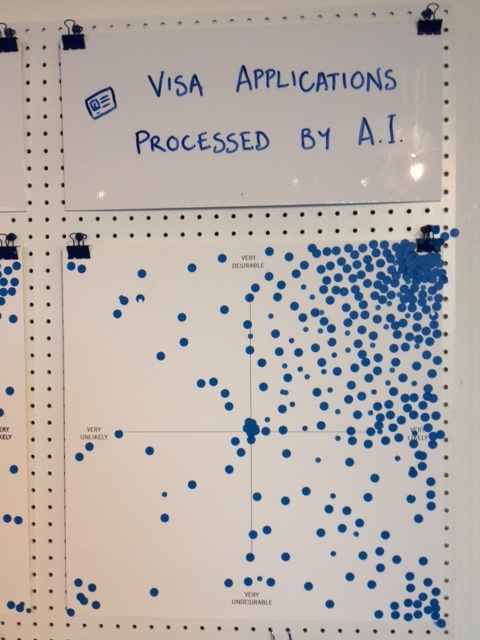

Votes were cast by visitors placing a blue sticker on a four-quadrant scatter graph. The N/S axis is Very Desirable/Very Undesirable and the W/E axis is Very Unlikely/Very Likely. The one pictured above gave me pause for thought. As you can see, the votes on the use of AI in Visa Applications cluster strongly around very desirable and very likely to happen.

Is this an Issue?

So why did this bother me? My first response was that the processing of visa applications is too ‘human’ in context to be delivered by machine. How is the individual’s human story to be condensed into mere rules to be processed by machine? Instinctively it felt wrong. Why do lots of people think this is a good idea? Well, it’s possible that people see it as a convenient and efficient way of processing applications and getting rid of backlogs. It’s worth noting that I visited about six weeks after Trump’s initial travel ban, when it was still very much in the news. The exhibition had been open for five weeks, so the travel ban and related discussions around the movement of people had been current for the whole time that people could have voted.

I discussed the issue with a small group mainly made up of fairly recent Trinity MBA graduates. Their view (and most of them would have had to get visas to travel to Ireland to study so they are the voice of experience) was that all visa applications are decided based on a set of rules regardless of who (or what) is making the decision. Even refugees will have their refugee status attached to visa applications so the human story has been dealt with earlier in the process. A CSR specialist of my acquaintance reacted as I had when I showed her the graphs.

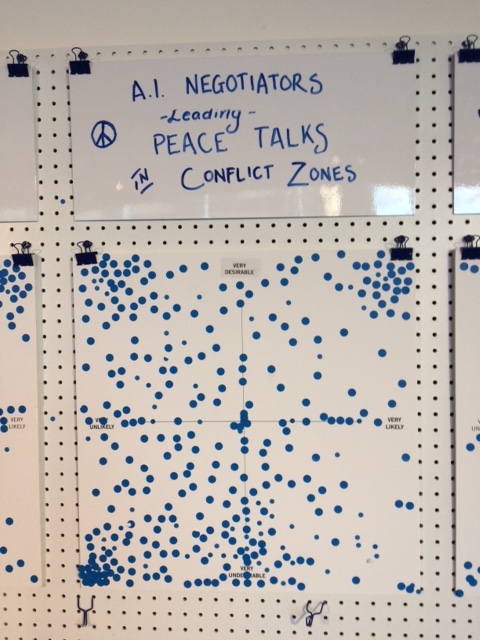

The votes on the graph asking about AI negotiators leading peace talks in conflict zones were more widely scattered. Surely, though, this is a truly human and emotional activity in which there are few rules, so it worried me that there was not a more definite leaning towards the Undesirable and Unlikely quadrant.

Why is this Important?

Many academics and researchers in the area of innovation and technology ethics (Groves et al. 2011, Mali 2009, Sutcliffe 2011, UNESCO 2006) have highlighted the need for citizen involvement and stakeholder consultation during the development of new technology. Scientists are seen as overly focused on what the technology can do and on stretching it to its limits, rather than as safe hands to consider the societal consequences of what they are inventing.

So we need scientists and the organisations that are developing this technology to engage with a broader range of thought, to consider possible consequences and implications, and to make decisions about the kind of society we want and how technology should be employed. This implies we also need to educate citizens in ethical reasoning and analytical thinking. We should remember that those who voted in the Science Gallery were presumably engaged enough to have visited an exhibition on Artificial Intelligence in the first place, so in that sense they have self-selected as having an interest in the topic. A broader-based sample of the population would likely be less well informed. So where do we find the civil society representatives to make these far-reaching recommendations?

The exhibition continues until 14th May 2017 and is well worth visiting. Permission to use the images was given by the Science Gallery.

References

Groves, C., Frater, L., Lee, R. & Stokes, E. 2011. Is There Room at the Bottom for CSR? Corporate Social Responsibility and Nanotechnology in the UK. Journal of Business Ethics, Vol. 101, Issue 4.

Mali, F. 2009. Bringing converging technologies closer to civil society: the role of the precautionary principle. Innovation – The European Journal of Social Science Research, Vol. 22, No. 1.

Sutcliffe, H. 2011. A Report on Responsible Research & Innovation. http://ec.europa.eu/research/science-society/document_library/pdf_06/rri-report-hilary-sutcliffe_en.pdf [accessed 7th April 2017]

UNESCO 2006. The Ethics and Politics of Nanotechnology.

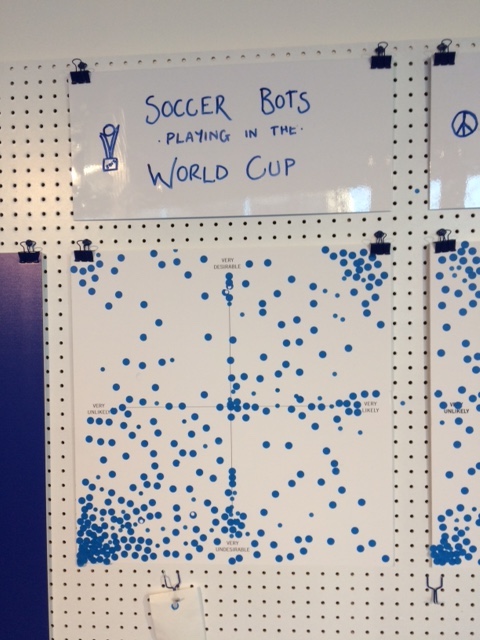

P.S. Sports fans will be relieved to see that it was considered far less likely and less desirable that soccer bots will play in the World Cup.